Hi, Readers.

A few days ago we discussed how to export data from Business Central to a csv file with Power Automate (No customization) – JSON to csv.

Today I would like to talk about how to import data from a csv file to Business Central with Power Automate in details.

Power Automate is a service that helps you create automated workflows (or flows) between apps and services, like Business Central. With Business Central, you’re given a license to Microsoft Power Automate. This license lets you use your Business Central data as part of a workflow in Microsoft Power Automate. You create flows and connect to your data from internal and external sources through the Business Central connector.

Sometimes we need to regularly import data to BC. If we customize the extension, since we cannot access the local folder, we may need to use complex methods such as Azure Functions, Graph API or other external services. Today I’d like to share a simple practice using Power Automate that I hope will give you some help.

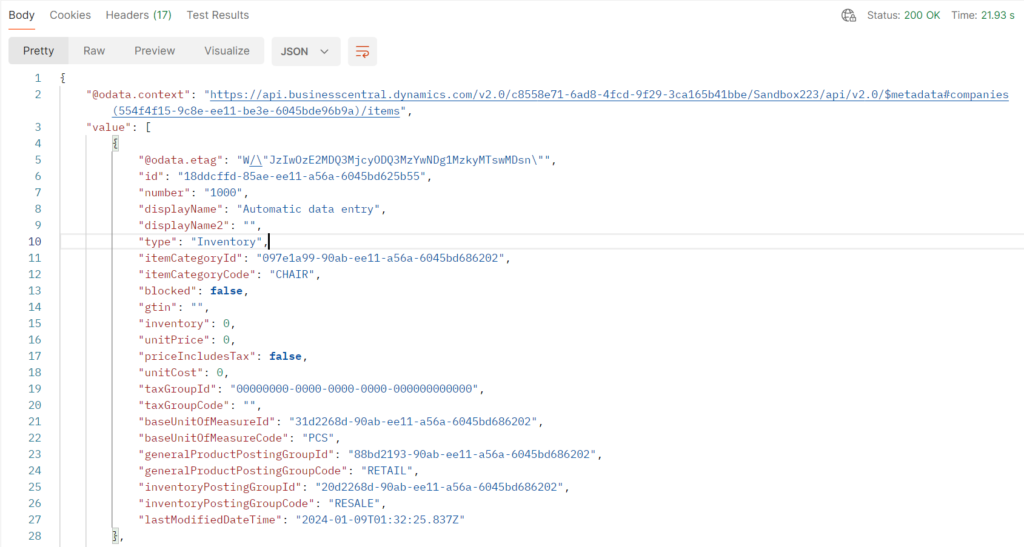

This time we are using the standard item API. Here is an example of the response, more details: Get items (What is returned is data in json format)

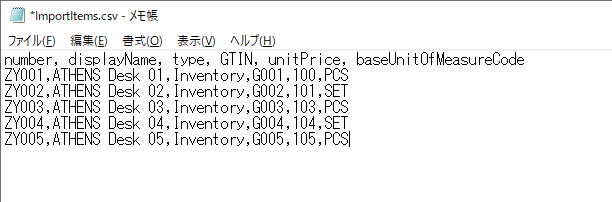

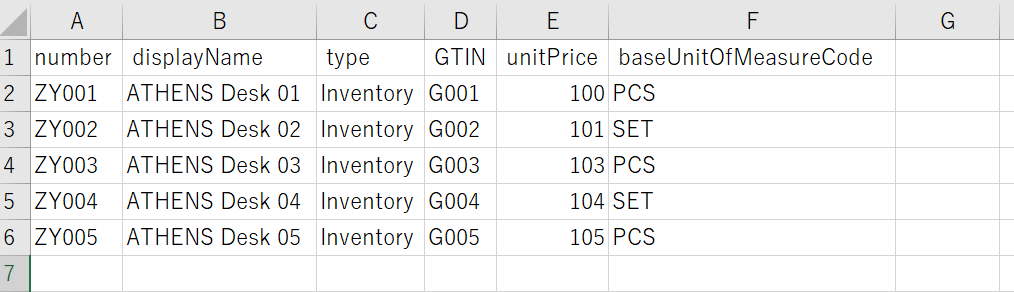

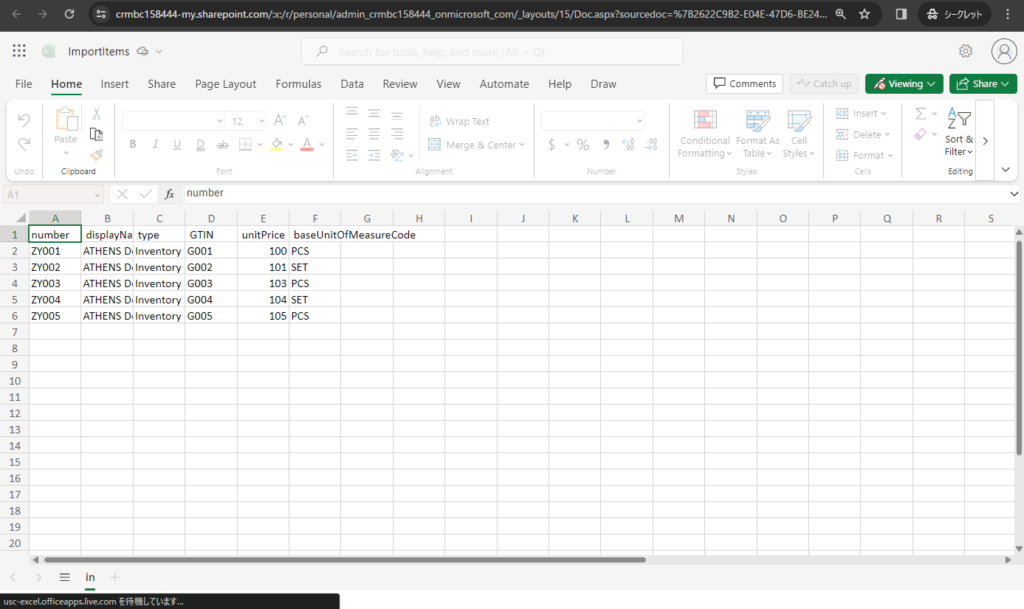

Test csv file: Please be careful not to leave any blank lines.

In Text Editor:

In Excel:

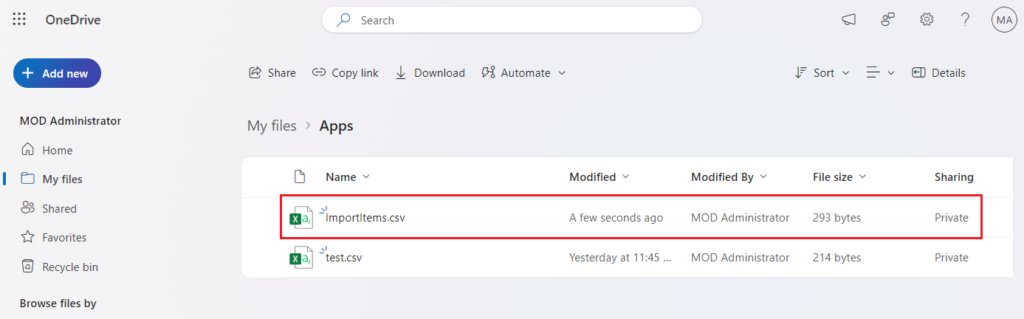

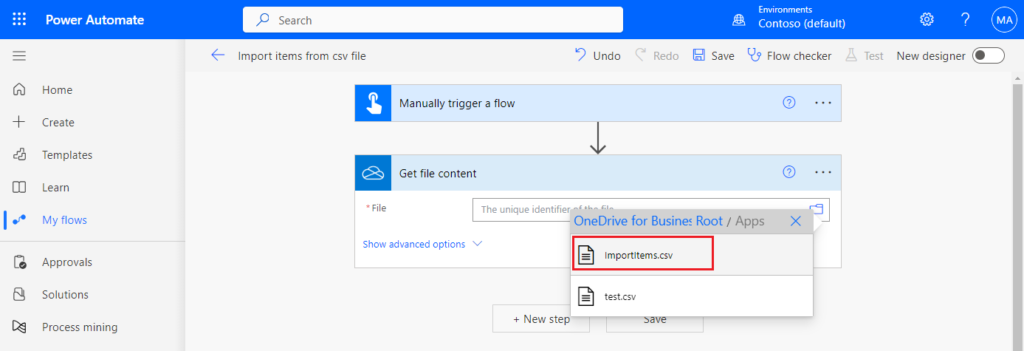

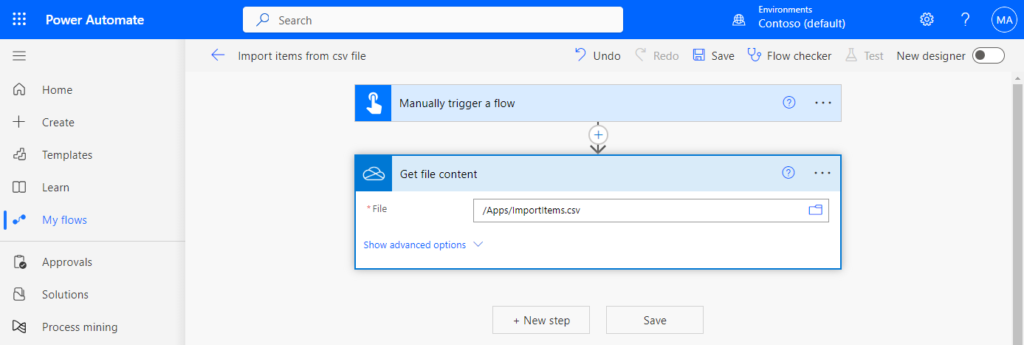

This time I saved it in OneDrive, you can also save it in Sharepoint, or any other location that Power Automate can access.

Okay, let’s start.

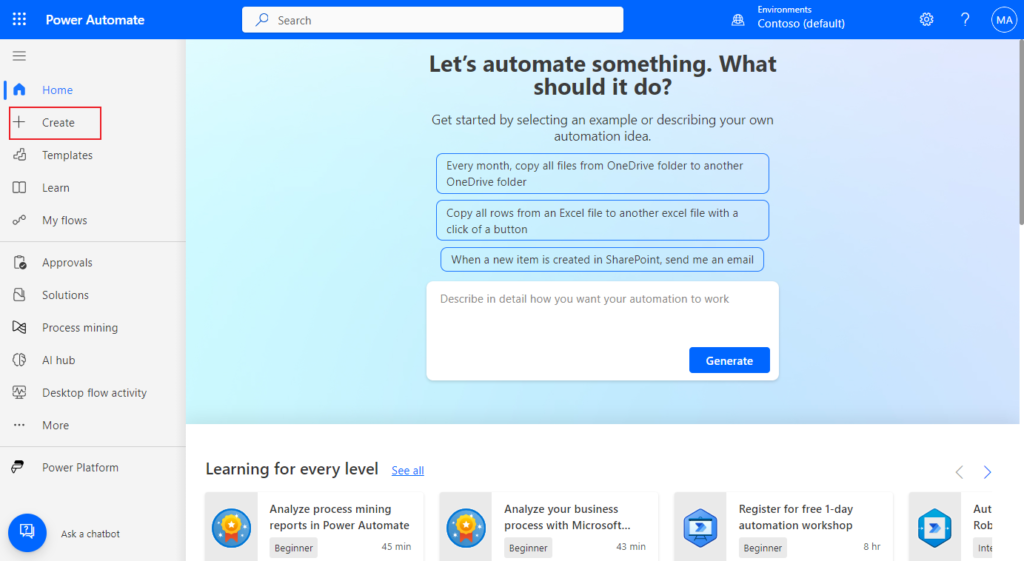

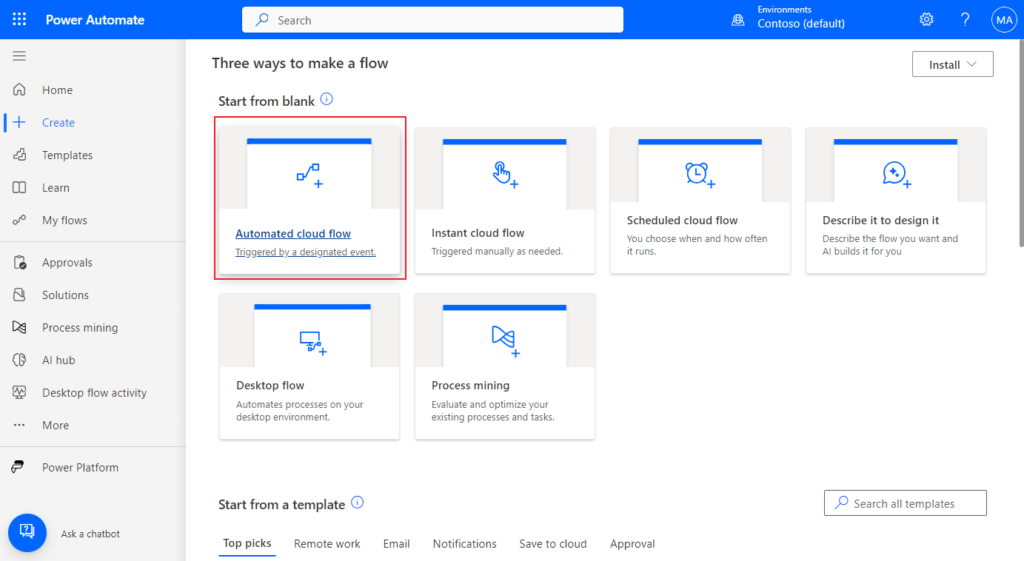

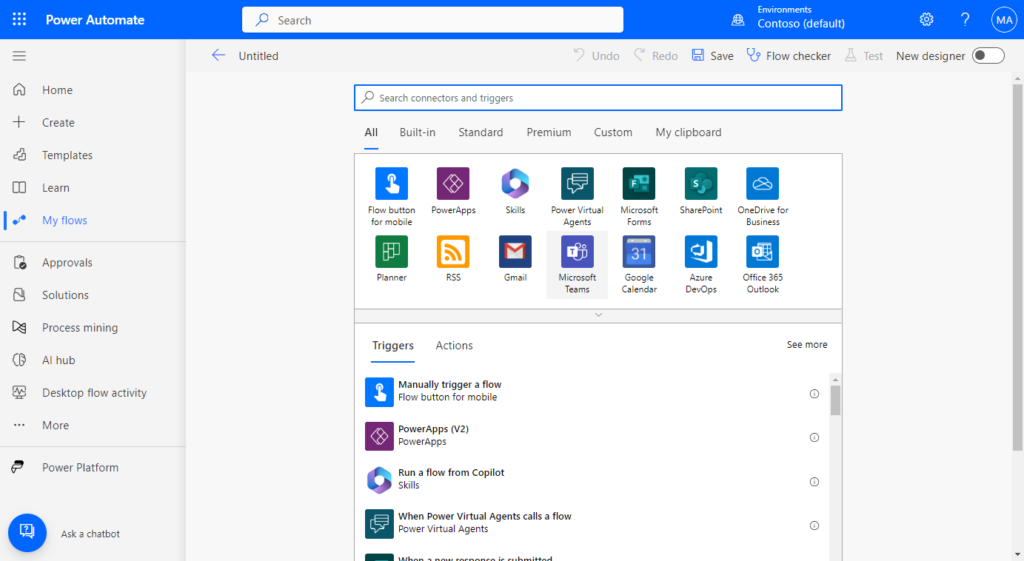

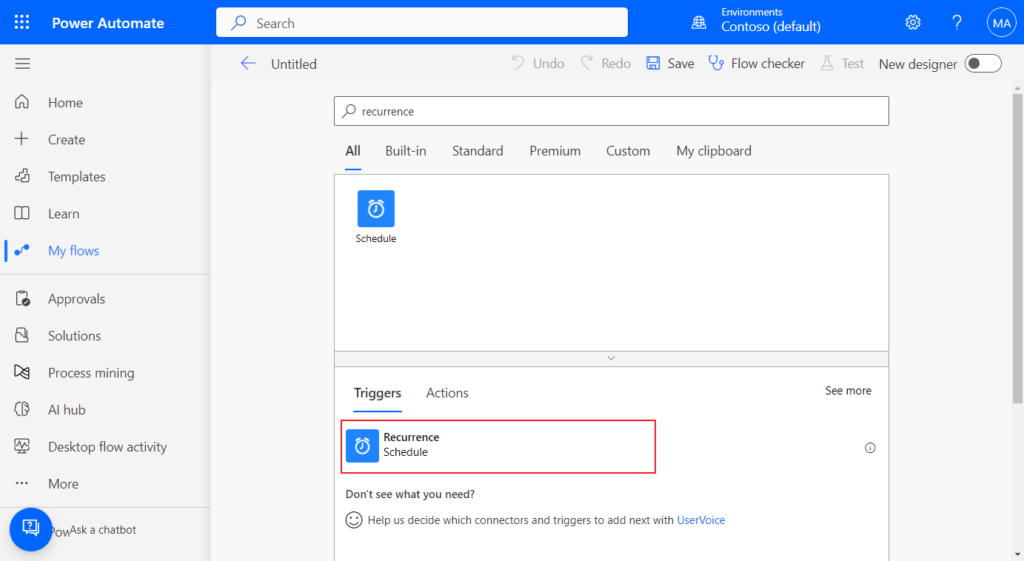

Sign in to Power Automate, and create a new Automated cloud flow.

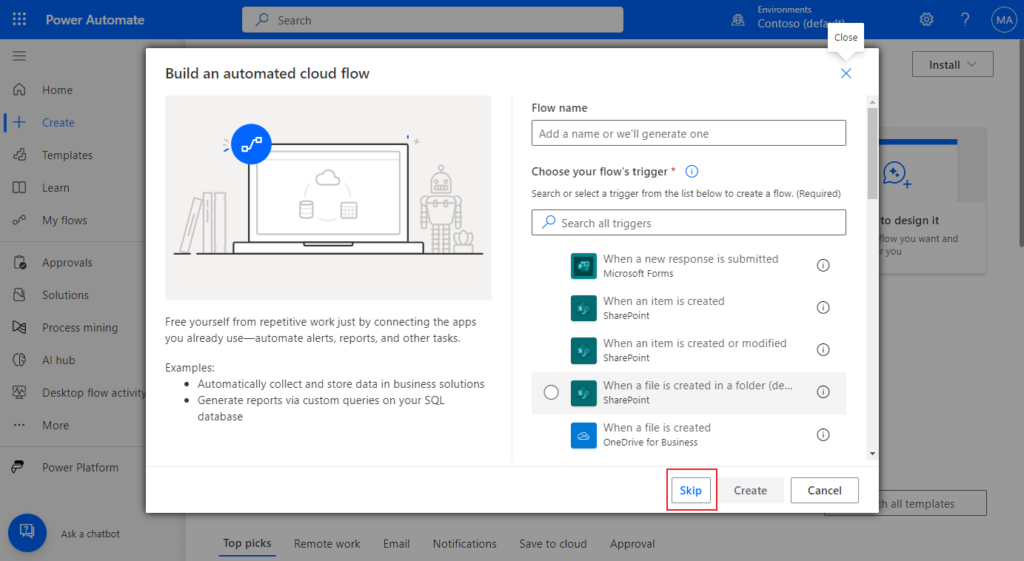

Choose Skip. (You can also set the flow name and flow’s trigger here)

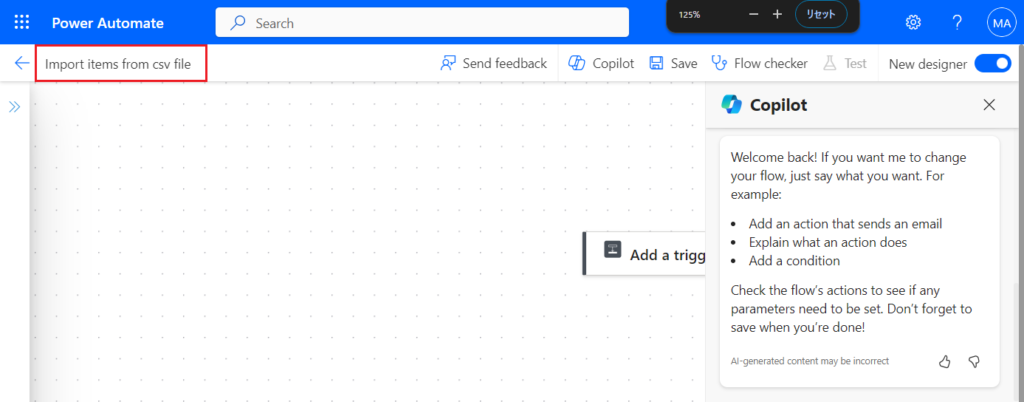

Give the flow a name.

For example, Import items from csv file

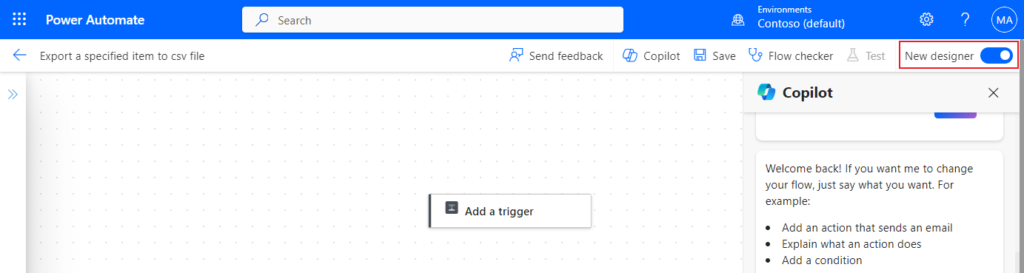

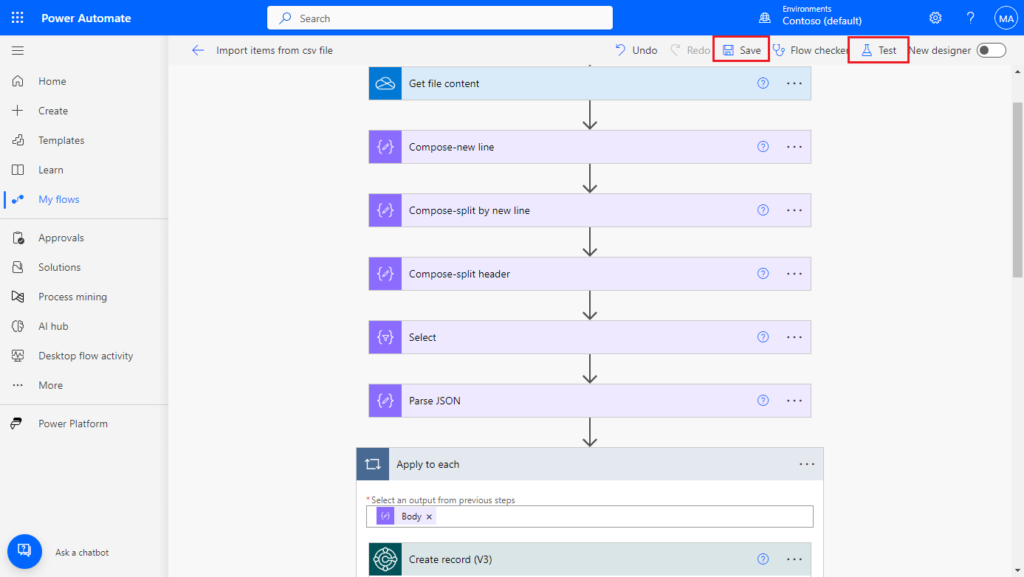

PS: Disable New designer in the upper right corner to return to the previous designer.

In this post, I shared it on the previous designer and the new one is the same.

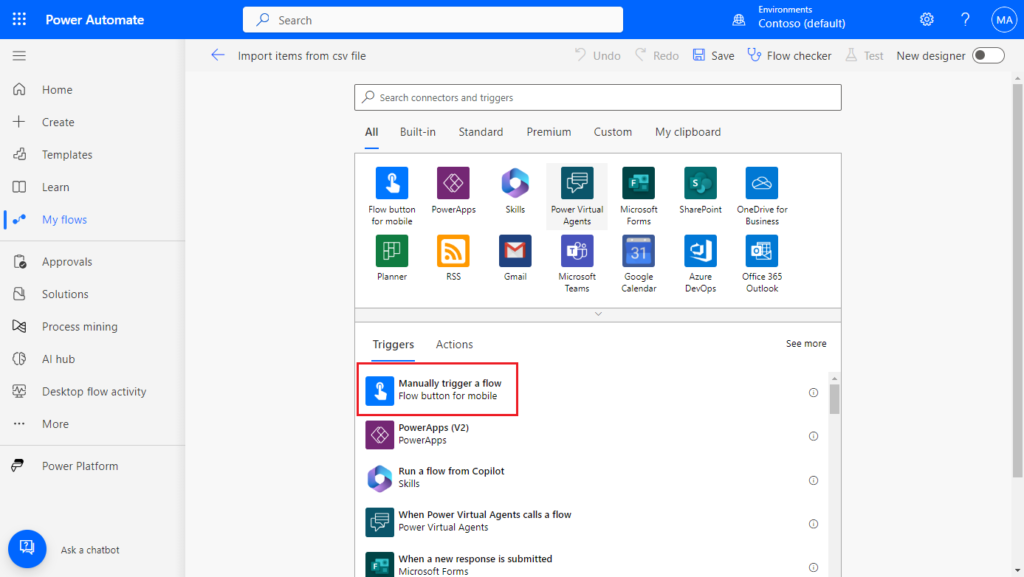

In this post, we start the flow manually, so click “Manually trigger a flow“

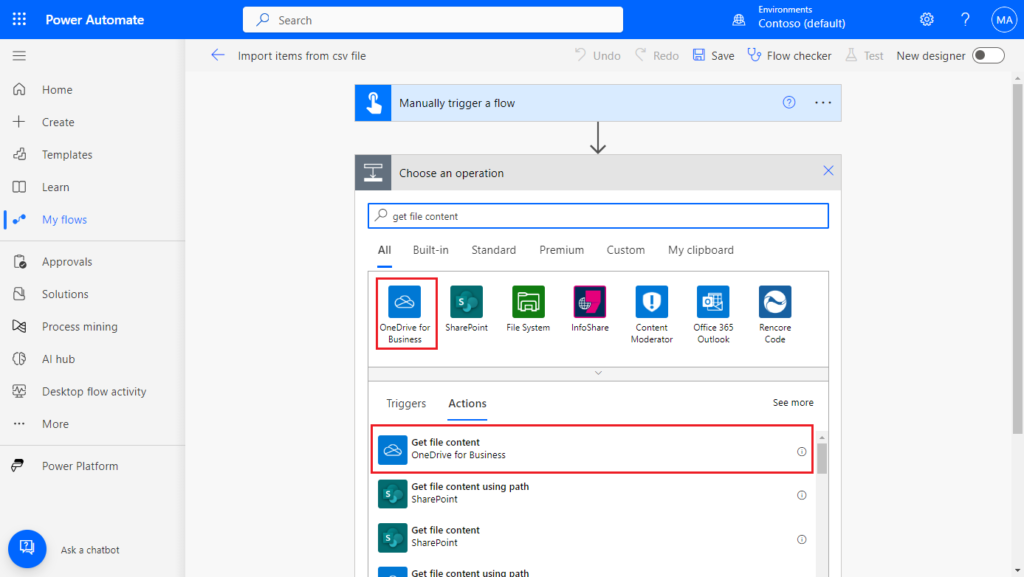

We need to read the csv file from OneDrive first. Click New step. Search for Get file content (OneDrive for Business), and click on it.

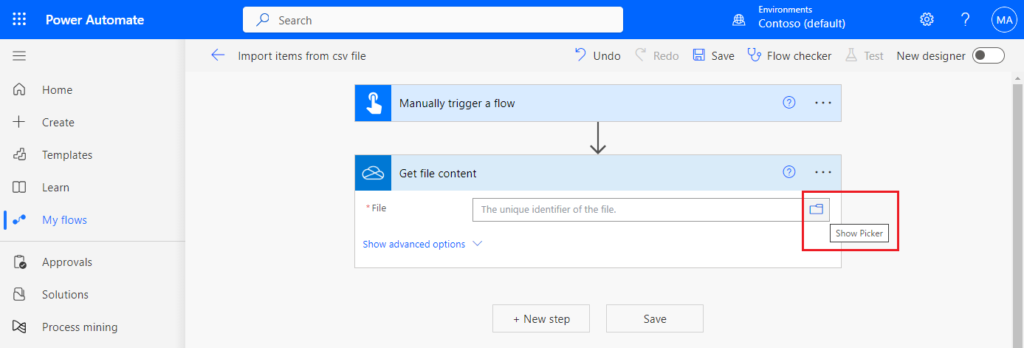

Click the file icon to select the file.

Then we need to convert the data in csv file into json format to facilitate reading into BC. This is the difficulty in importing csv file. Below are several key functions we will use this time. It is recommended to read MS learn (docs) first.

- Split function: Splits a text string into a table of substrings.

- Replace and Substitute functions: Replace a portion of a string of text with another string.

- Skip function: Remove items from the front of a collection, and return all the other items. (This function is not found in the Power Platform documentation, the link is for Azure Logic Apps)

First we need to split the lines. (Please note that this way for reference only, you can improve it according to your own needs)

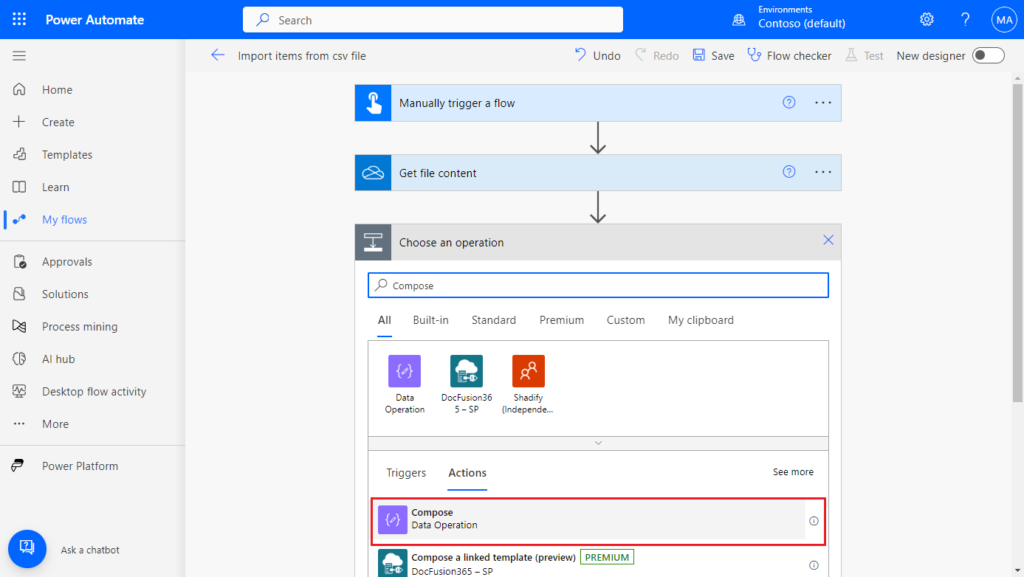

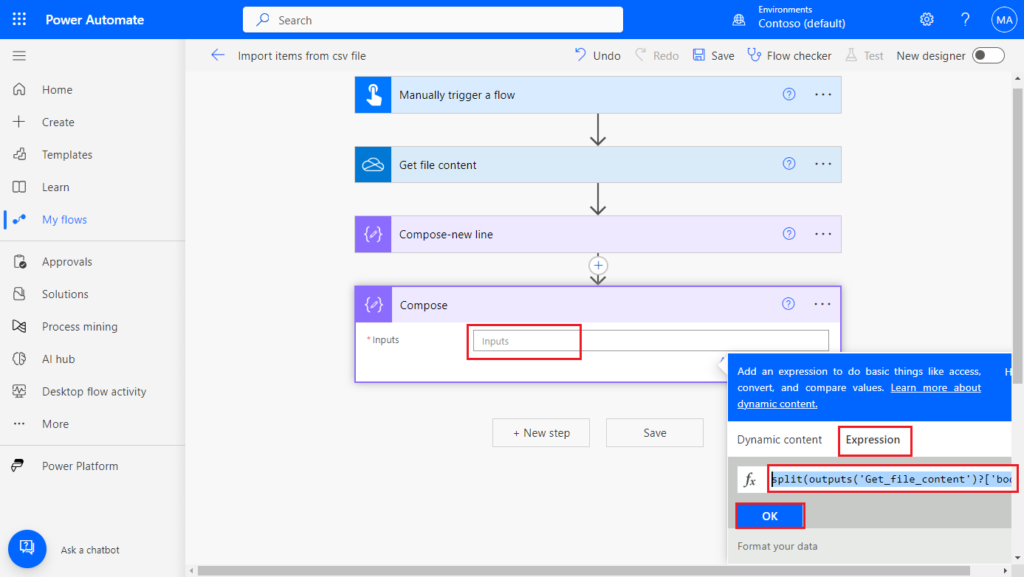

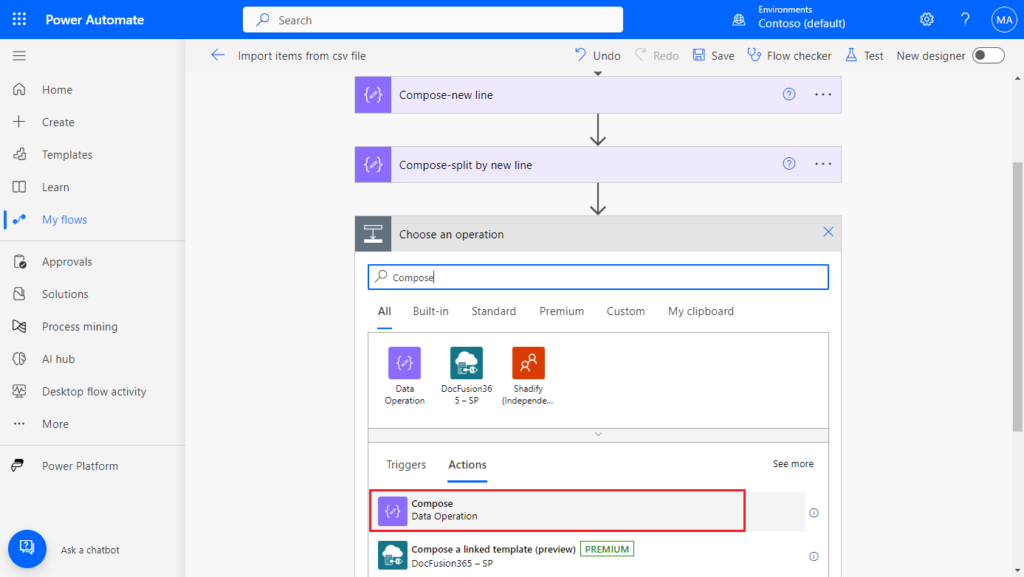

Click New step. Search for Compose, and click on it.

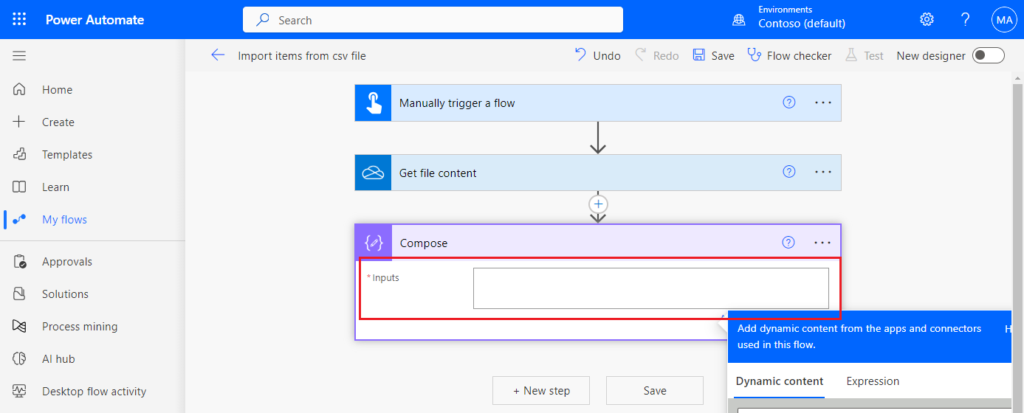

Enter an “Enter” key in the Inputs. Use this carriage return character to split lines in csv.

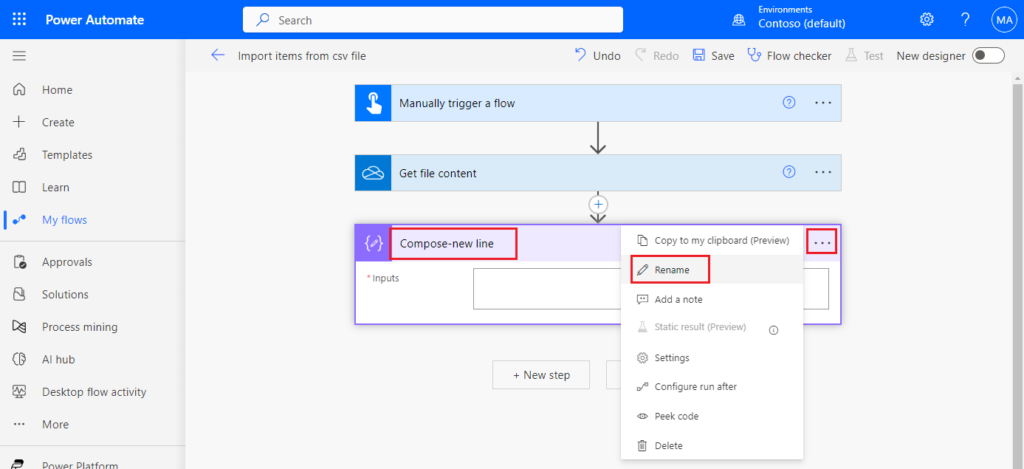

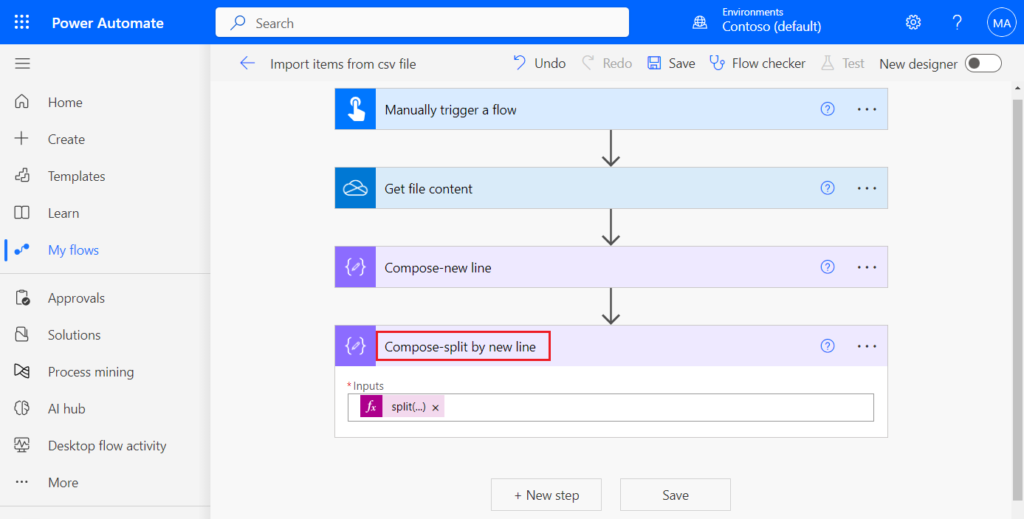

We can choose … -> Rename to rename the step. The name of the step will be used in the expression for the following steps, so please try to be as concise as possible. For example, Compose-new line

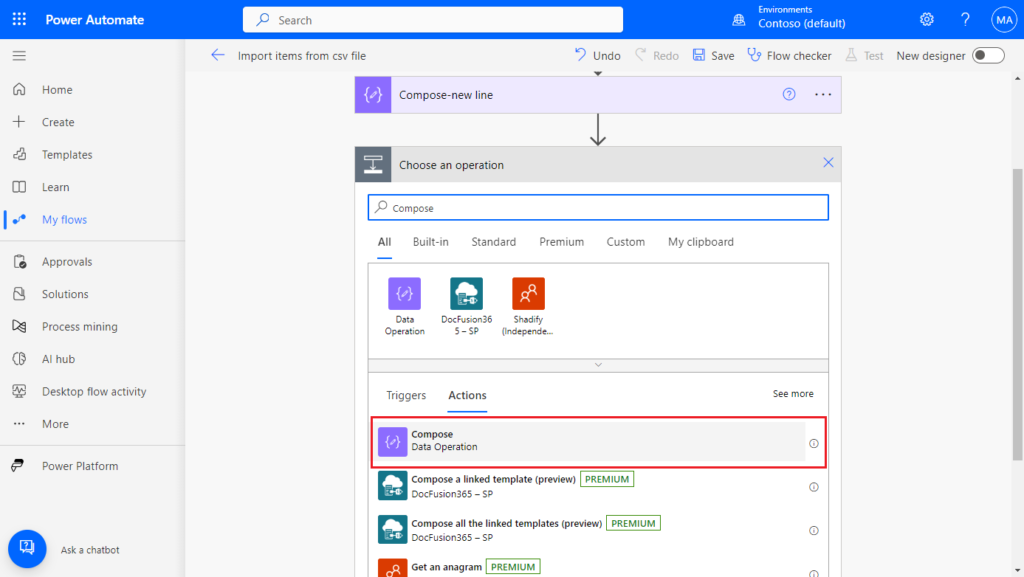

Click New step. Search for Compose, and click on it.

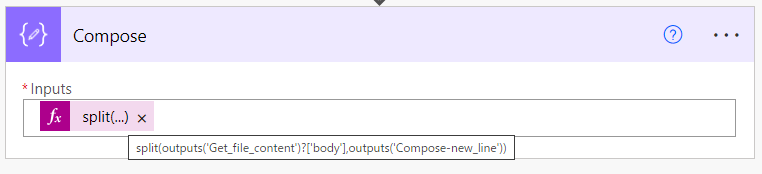

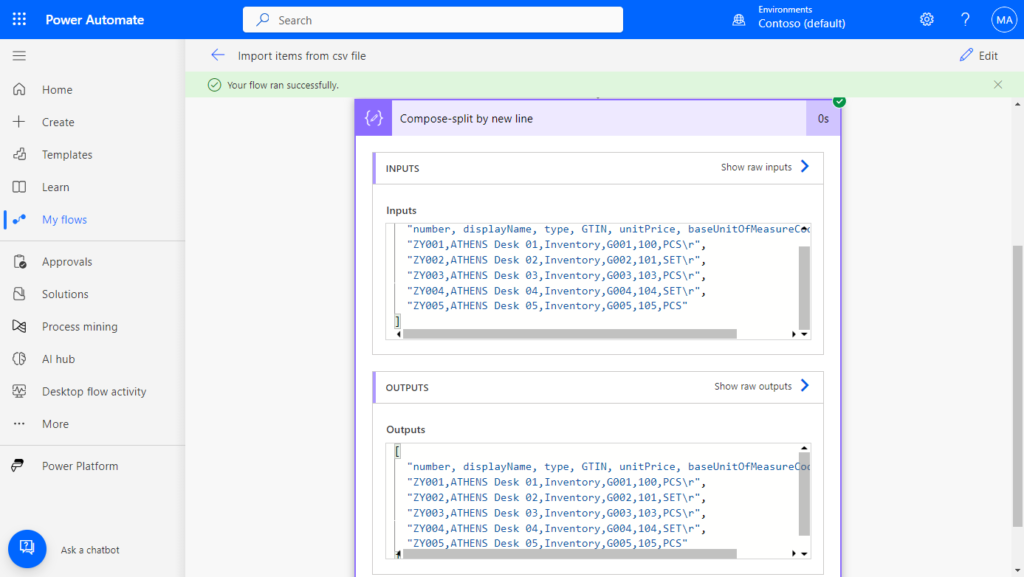

Click Inputs -> Expression, enter the following formula and click OK.

split(outputs('Get_file_content')?['body'],outputs('Compose-new_line'))

Rename it as Compose-split by new line

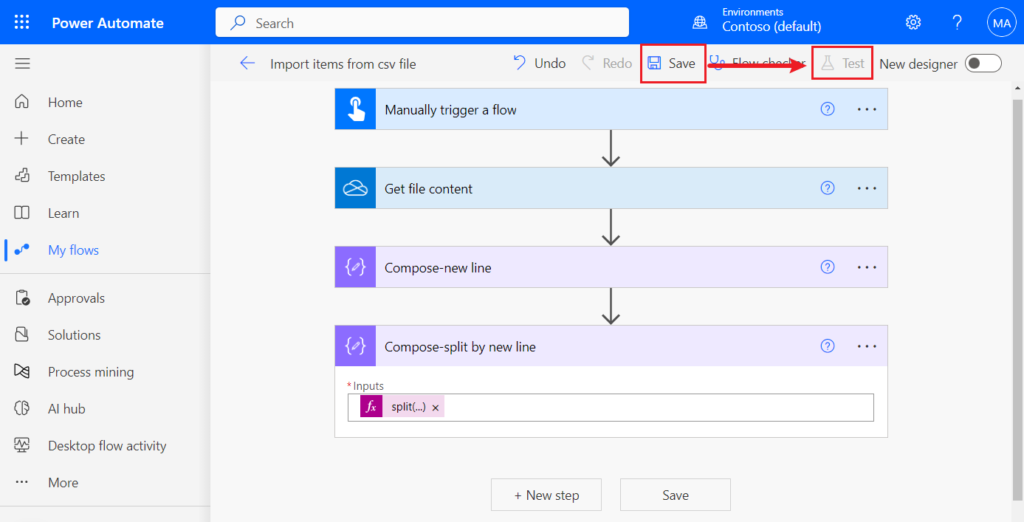

PS: We can save the flow and click Test to test it.

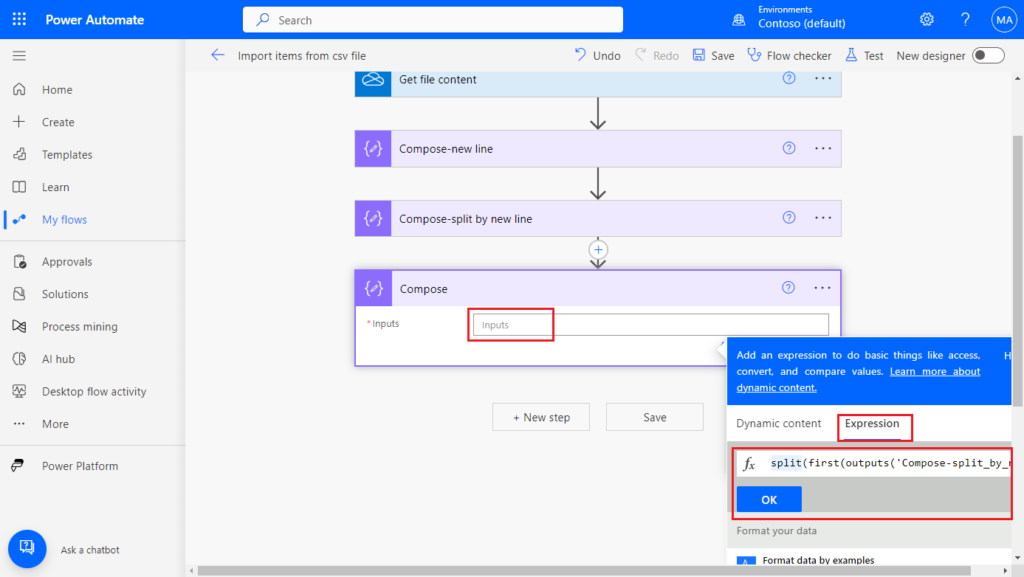

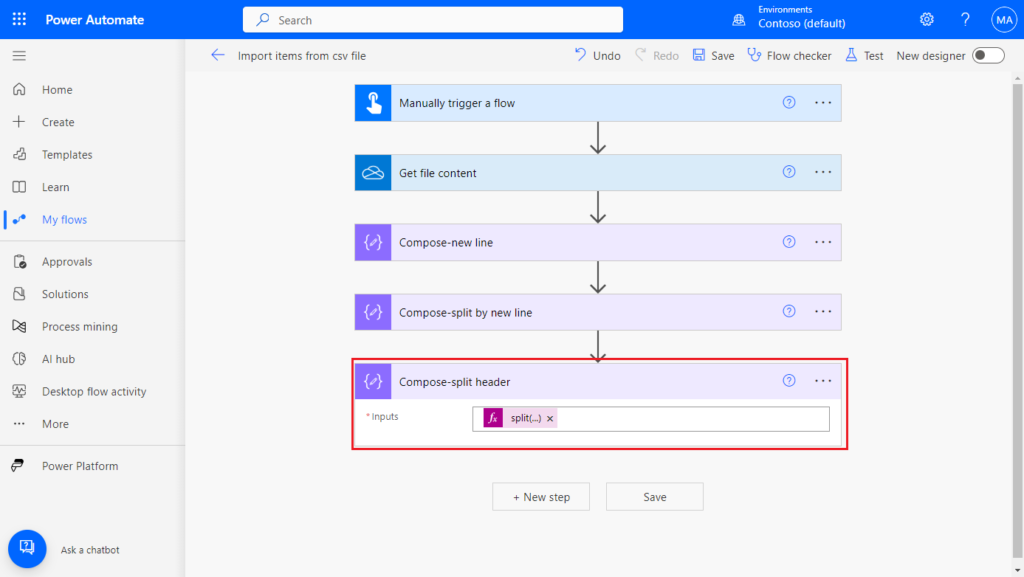

Next, since my csv file contains headers, if you need to use the text/title in the header, you can extract the header as follows. (If your csv file does not contain headers or does not require headers, you can ignore this step)

Click New step. Search for Compose, and click on it.

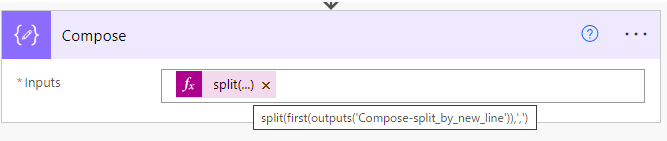

Click Inputs -> Expression, enter the following formula and click OK.

split(first(outputs('Compose-split_by_new_line')),',')

Rename it as Compose-split header

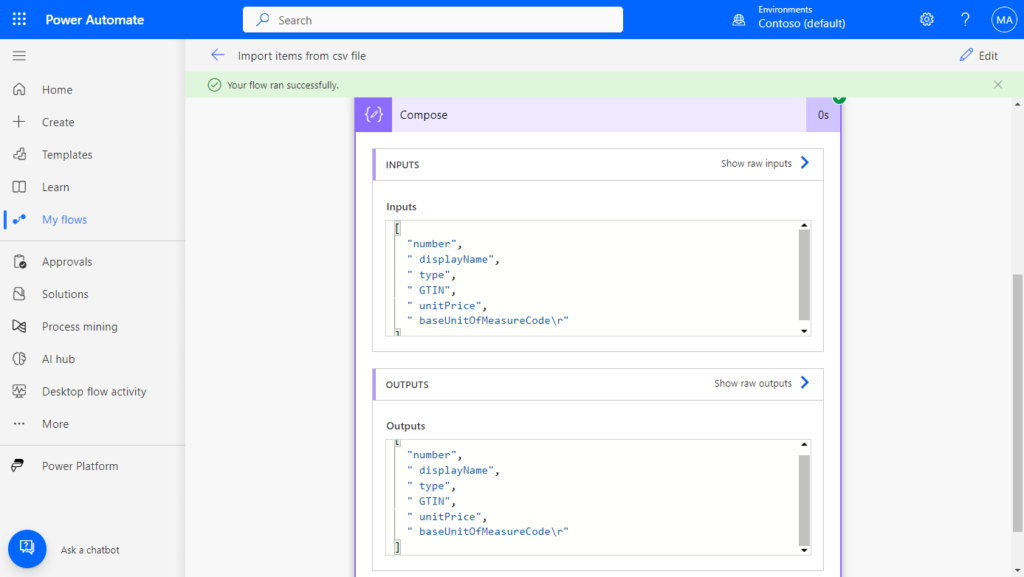

Test:

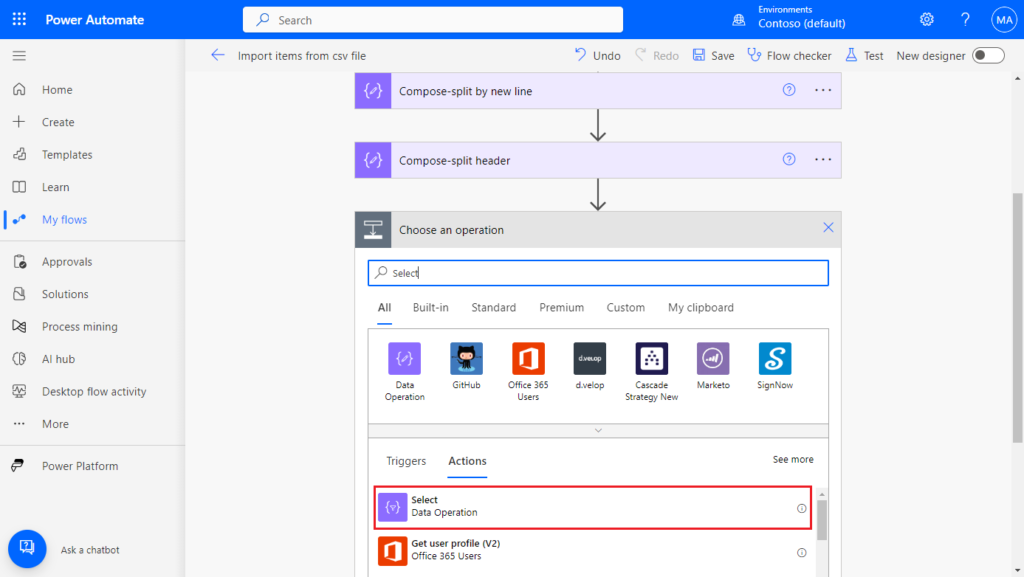

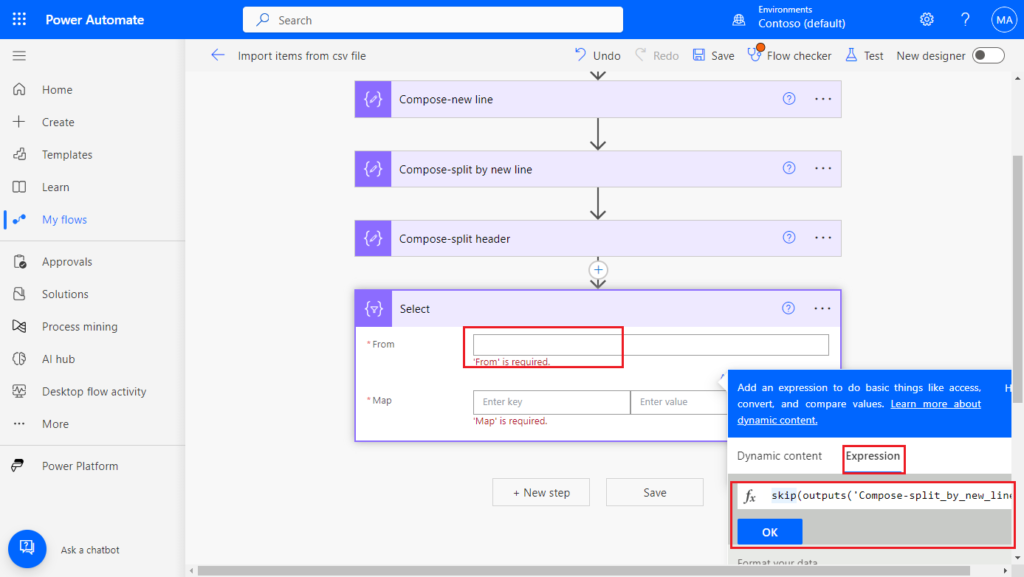

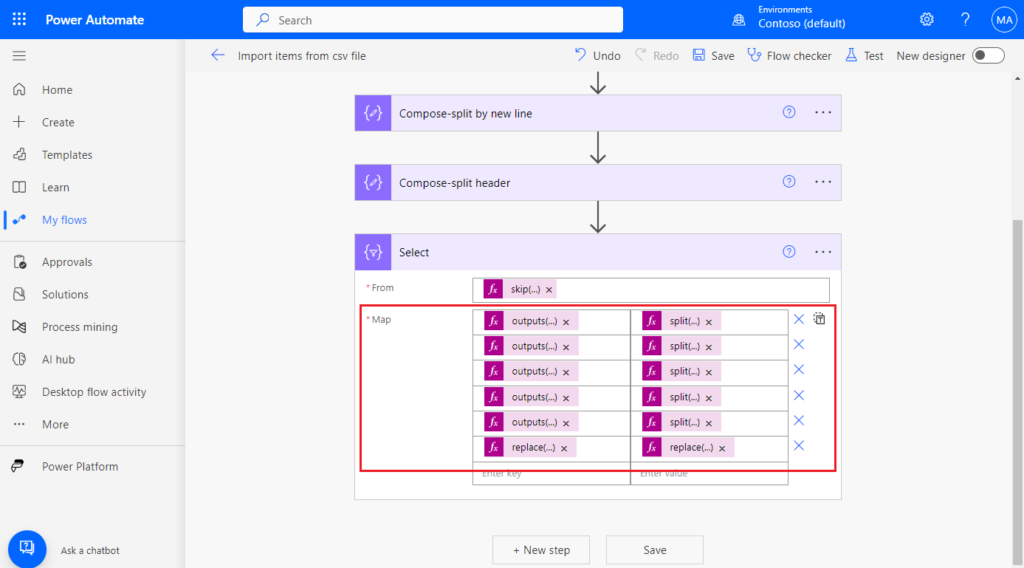

Next we can skip the header and split the data.

Click New step. Search for Select, and click on it.

Click From -> Expression, enter the following formula and click OK. (Skip the first line)

skip(outputs('Compose-split_by_new_line'),1)

Then we need to enter key and value respectively. The left side is the key and the right side is the value. Fields in csv file are similar to option/enum type, begin with the number 0. There are 6 fields in my file, so it is 0~5.

Key: If your csv file does not contain a header, or you have not used the above step (Compose-split header) to split the header, you can enter the fixed key manually. This does not affect importing the data into BC.

outputs('Compose-split_header')[0]

outputs('Compose-split_header')[1]

outputs('Compose-split_header')[2]

outputs('Compose-split_header')[3]

outputs('Compose-split_header')[4]

replace(outputs('Compose-split_header')[5],decodeUriComponent('%0D'),'')Value: Get the value from the split line, the delimiter is ‘,’, you can replace it with the delimiter in your csv file.

split(item(),',')?[0]

split(item(),',')?[1]

split(item(),',')?[2]

split(item(),',')?[3]

split(item(),',')?[4]

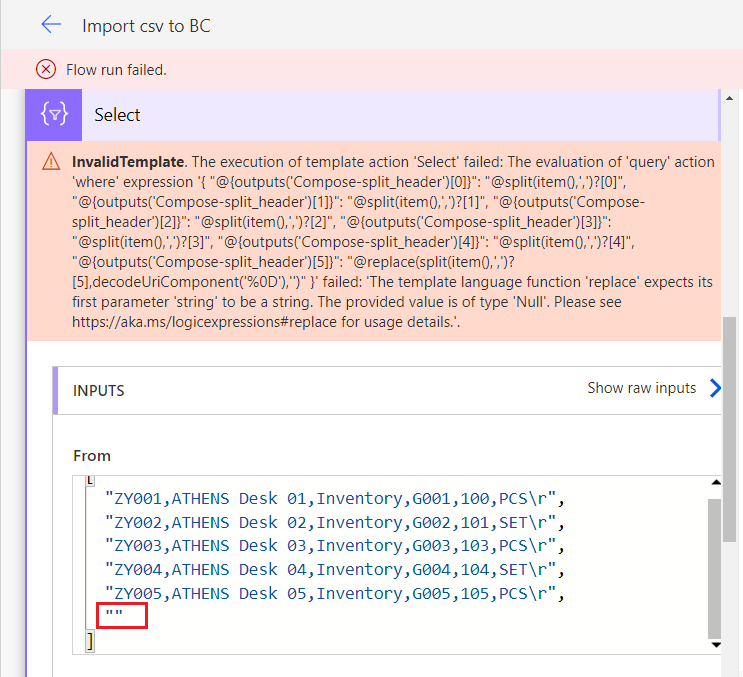

replace(split(item(),',')?[5],decodeUriComponent('%0D'),'')

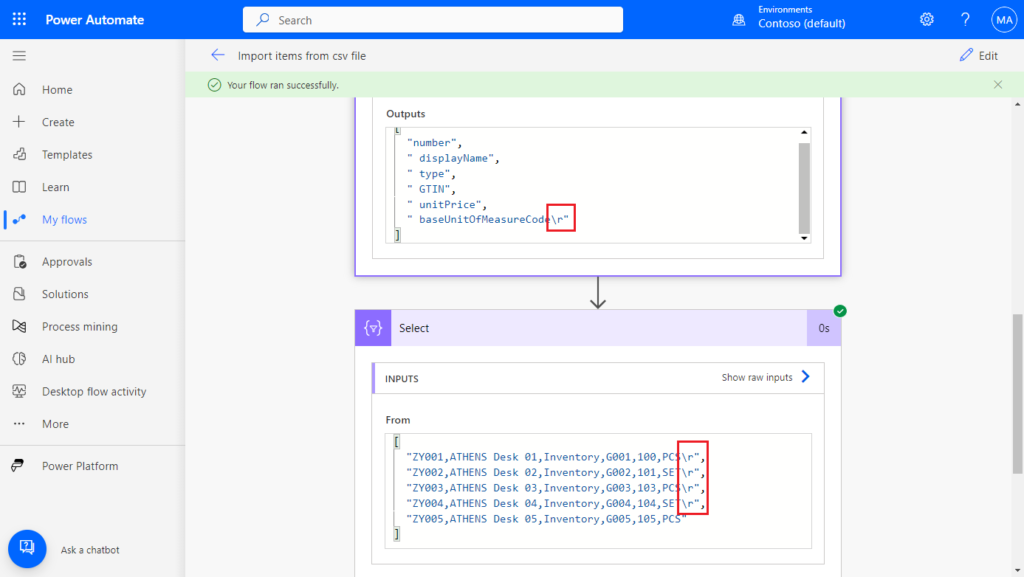

As for why the last one uses Replace function, because except for the last line, the last field of each line contains the Carriage Return ‘\r’, so I replaced it with empty.

More details: URL-encoding

\r (Carriage Return): decodeUriComponent(‘%0A’)

\n (Line Feed): decodeUriComponent(‘%0D’)

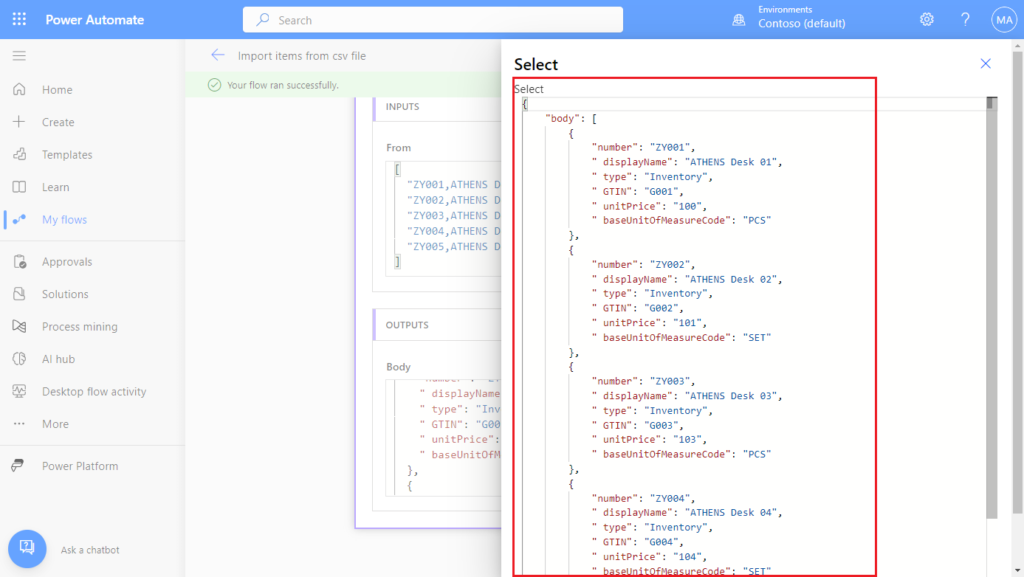

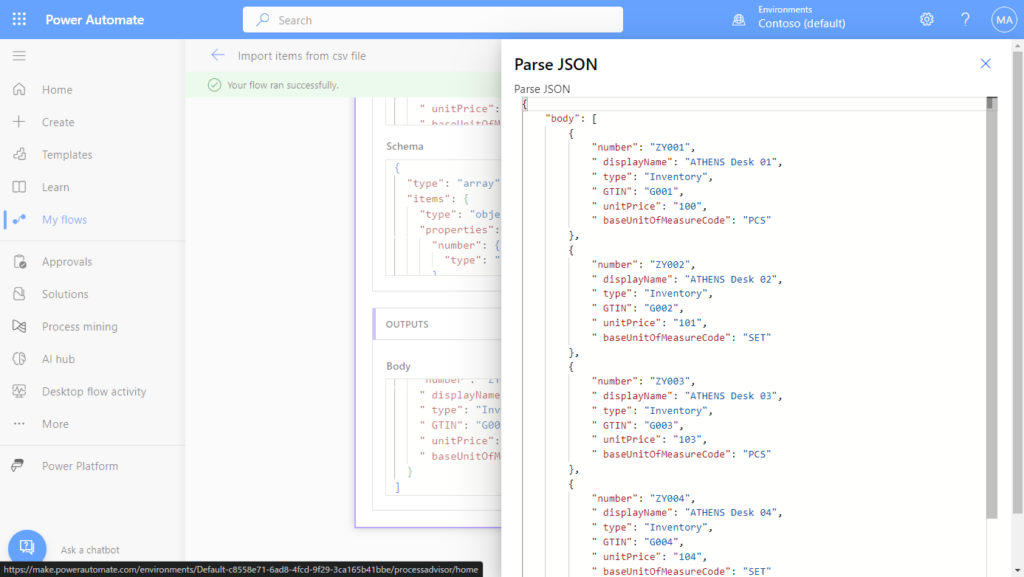

Test:

{

"body": [

{

"number": "ZY001",

" displayName": "ATHENS Desk 01",

" type": "Inventory",

" GTIN": "G001",

" unitPrice": "100",

" baseUnitOfMeasureCode": "PCS"

},

{

"number": "ZY002",

" displayName": "ATHENS Desk 02",

" type": "Inventory",

" GTIN": "G002",

" unitPrice": "101",

" baseUnitOfMeasureCode": "SET"

},

{

"number": "ZY003",

" displayName": "ATHENS Desk 03",

" type": "Inventory",

" GTIN": "G003",

" unitPrice": "103",

" baseUnitOfMeasureCode": "PCS"

},

{

"number": "ZY004",

" displayName": "ATHENS Desk 04",

" type": "Inventory",

" GTIN": "G004",

" unitPrice": "104",

" baseUnitOfMeasureCode": "SET"

},

{

"number": "ZY005",

" displayName": "ATHENS Desk 05",

" type": "Inventory",

" GTIN": "G005",

" unitPrice": "105",

" baseUnitOfMeasureCode": "PCS"

}

]

}PS: If the csv file contains empty lines, an error will occur.

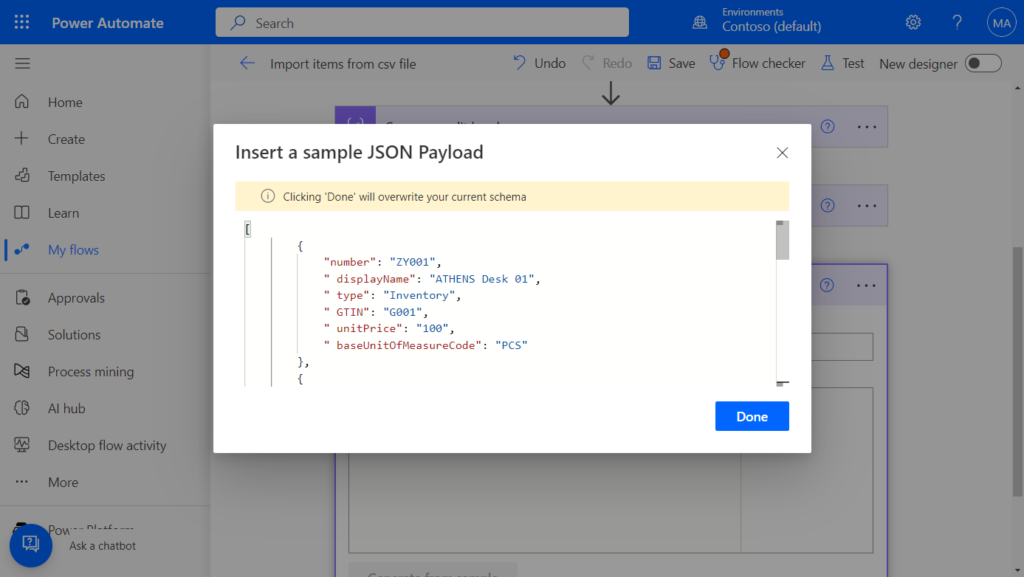

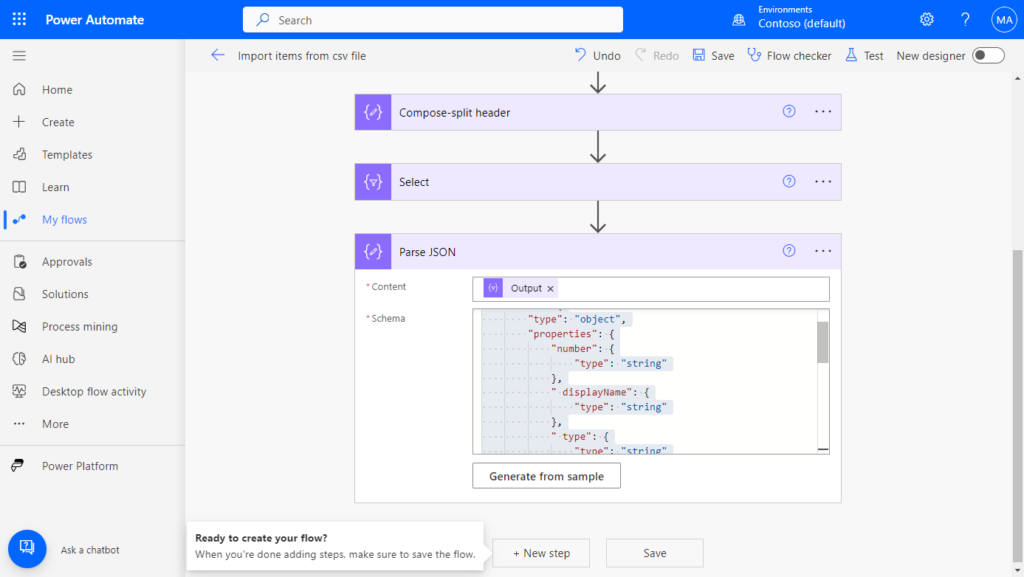

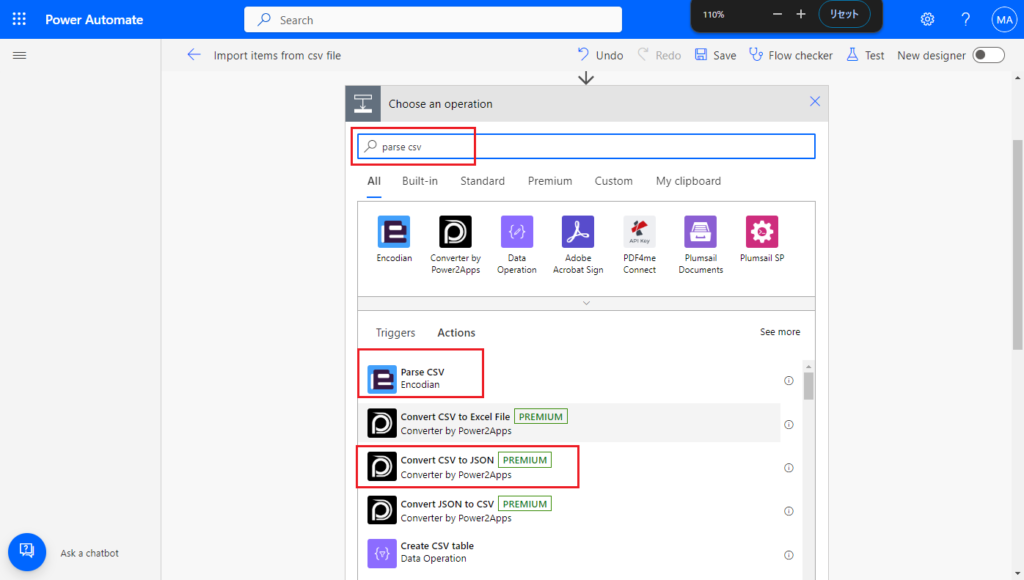

Next we can convert it to JSON format.

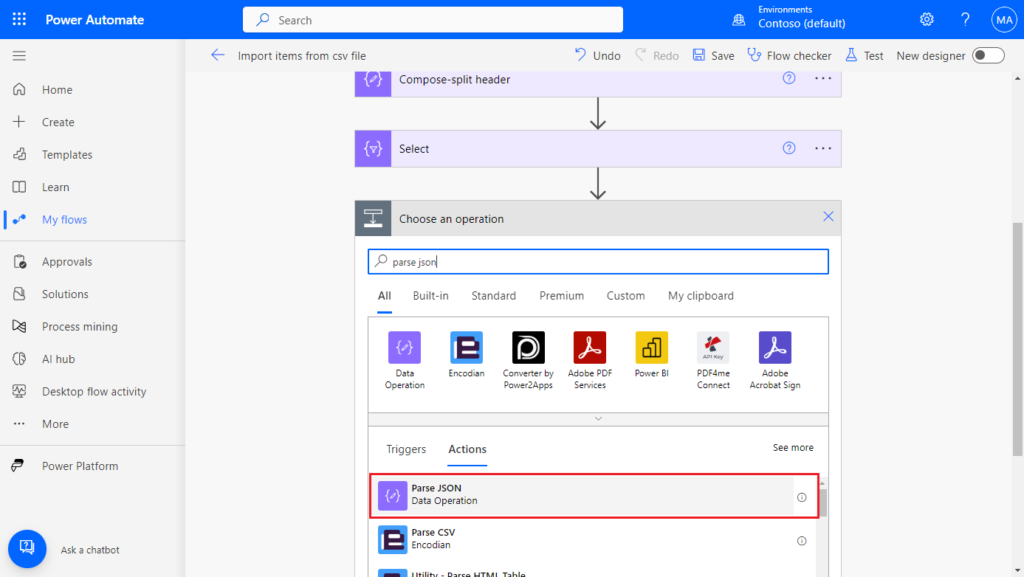

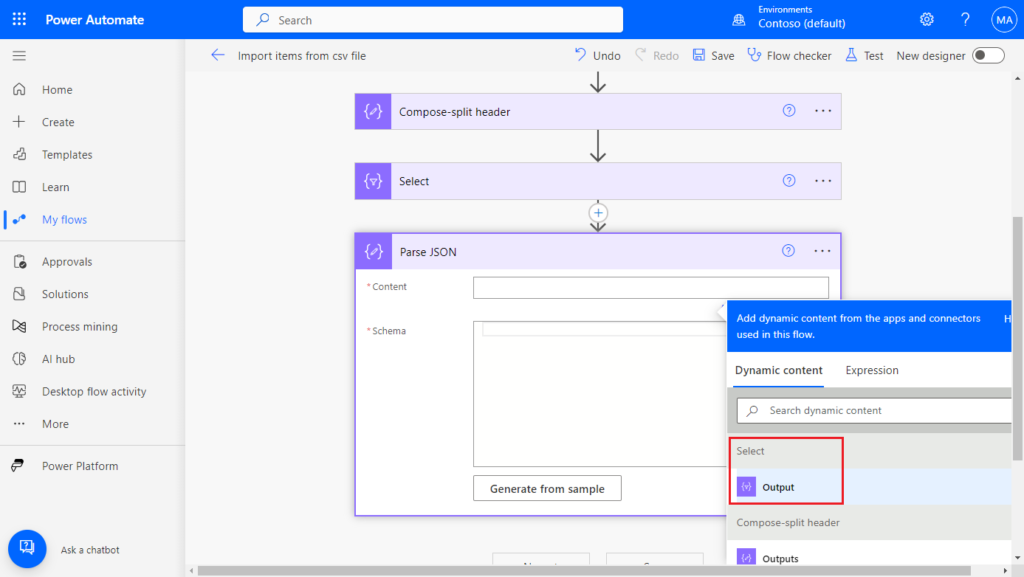

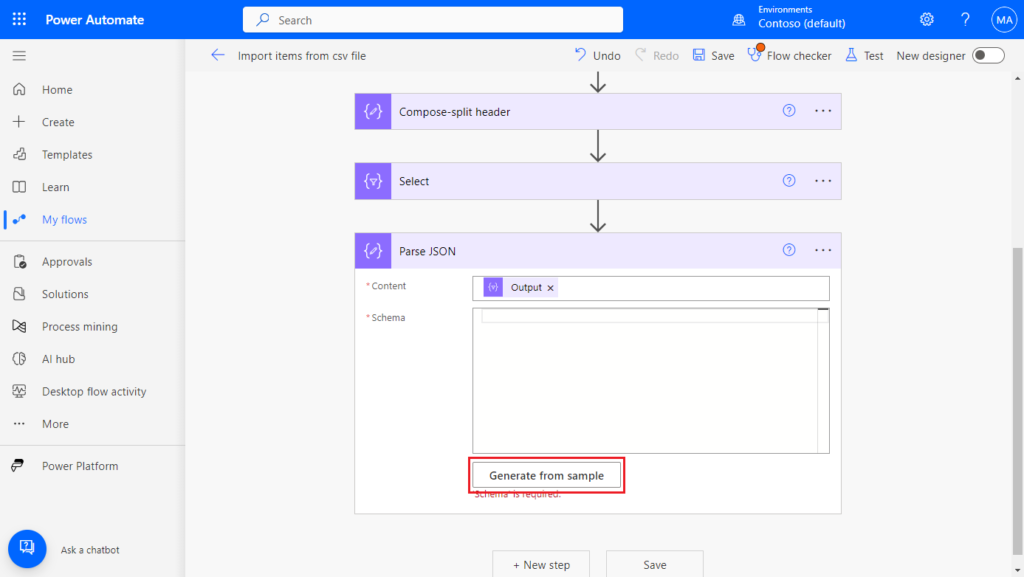

Click New step. Search for Parse Json, and click on it.

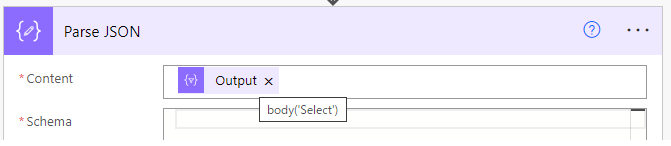

Select Select -> Output in Content.

For Schema, you can also enter it manually, but if the json file is very complex, choose Generate from sample

Paste the json value and click Done. The json value can be obtained from the outputs in the previous step. This does not require complete data. For example, the following three pieces of data are enough.

[

{

"number": "ZY001",

" displayName": "ATHENS Desk 01",

" type": "Inventory",

" GTIN": "G001",

" unitPrice": "100",

" baseUnitOfMeasureCode": "PCS"

},

{

"number": "ZY002",

" displayName": "ATHENS Desk 02",

" type": "Inventory",

" GTIN": "G002",

" unitPrice": "101",

" baseUnitOfMeasureCode": "SET"

},

{

"number": "ZY003",

" displayName": "ATHENS Desk 03",

" type": "Inventory",

" GTIN": "G003",

" unitPrice": "103",

" baseUnitOfMeasureCode": "PCS"

}

]

Test:

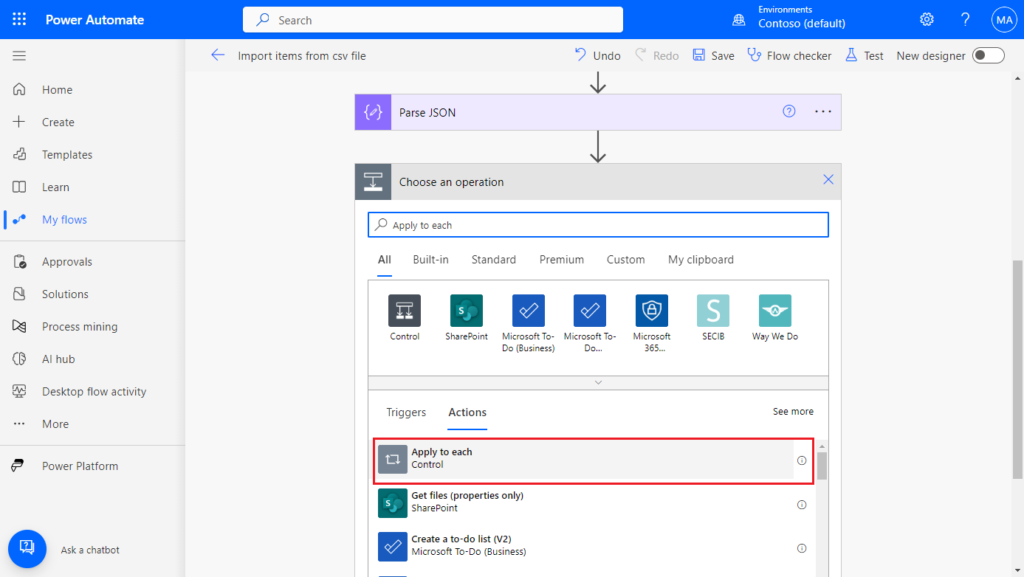

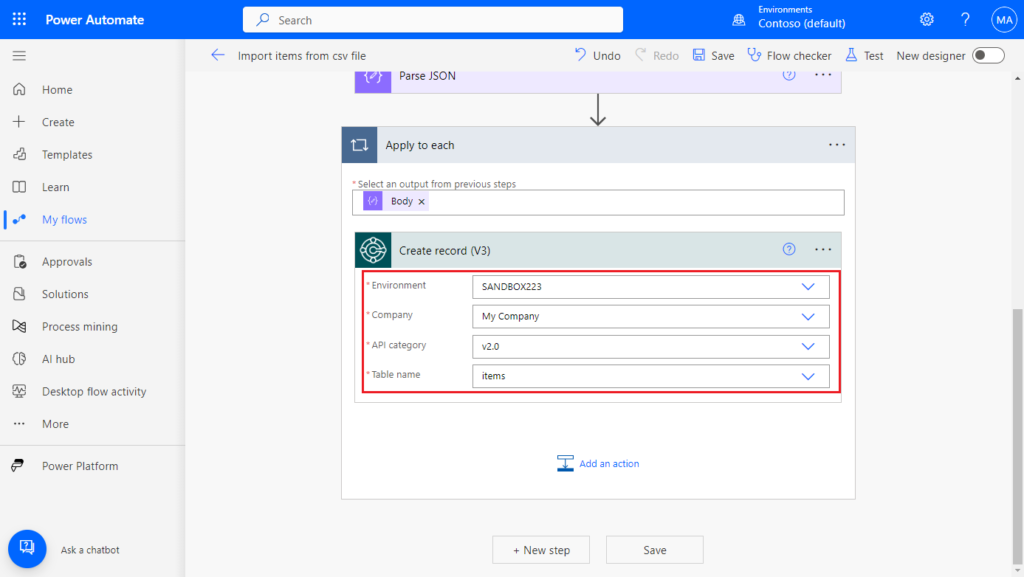

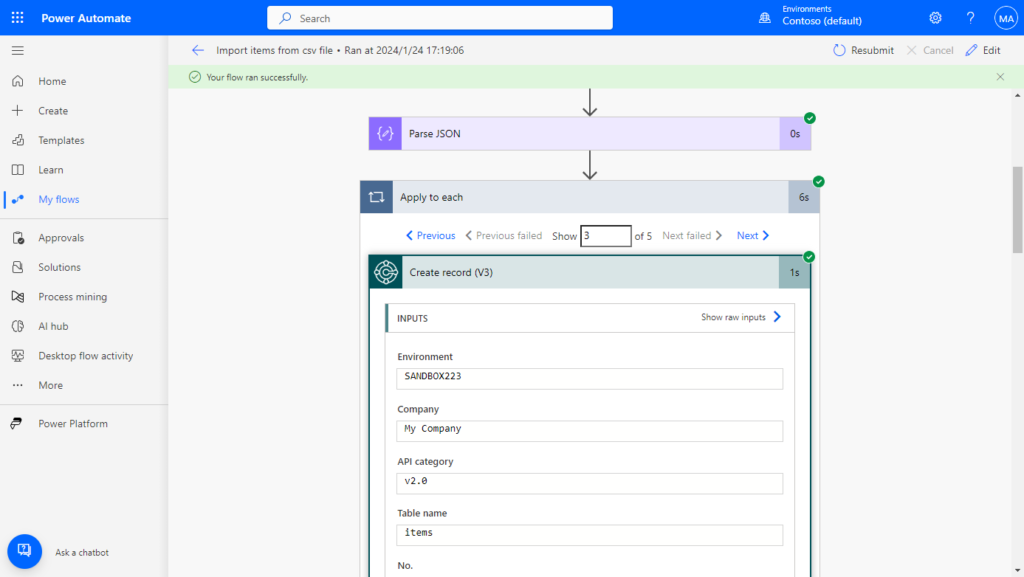

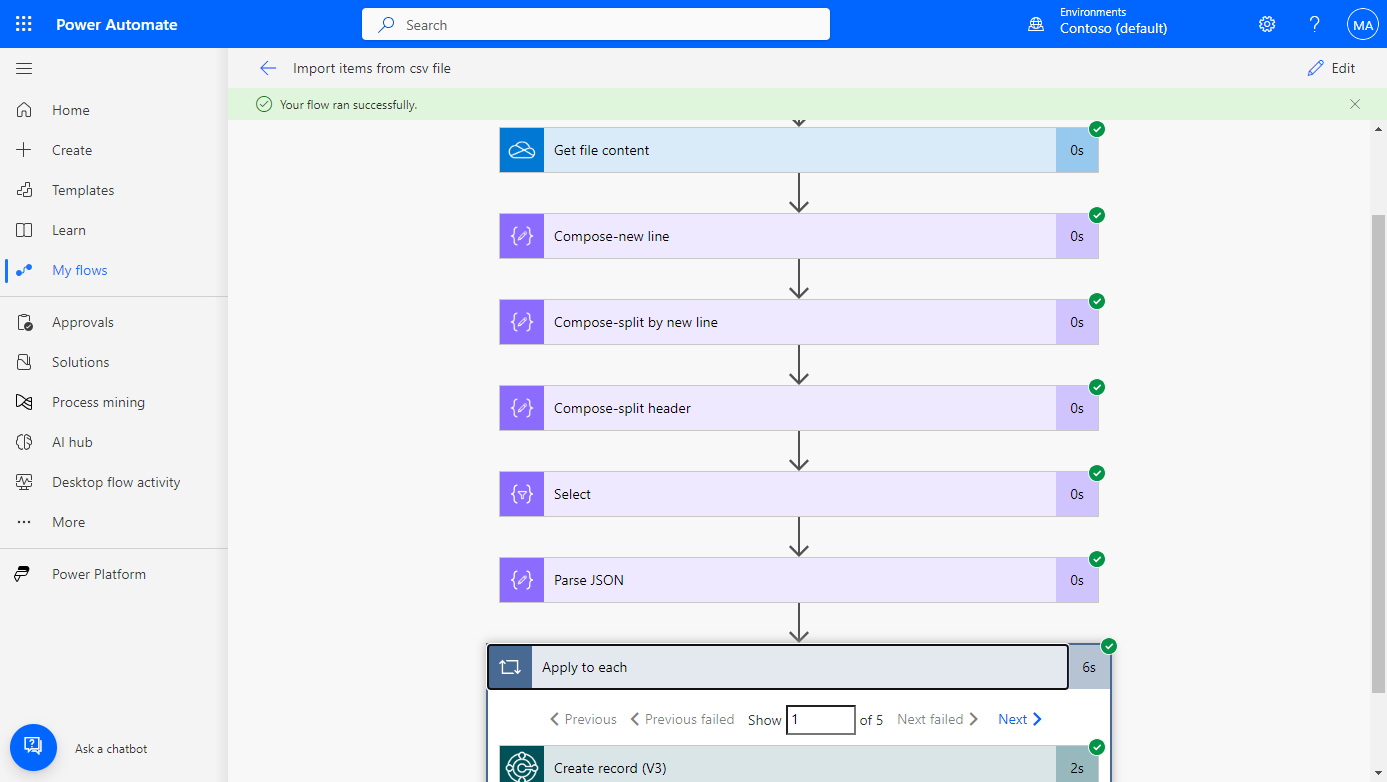

OK, now we can insert data into the BC. However, it is important to note that because there are multiple records in the JSON file, each record needs to be inserted into the BC separately.

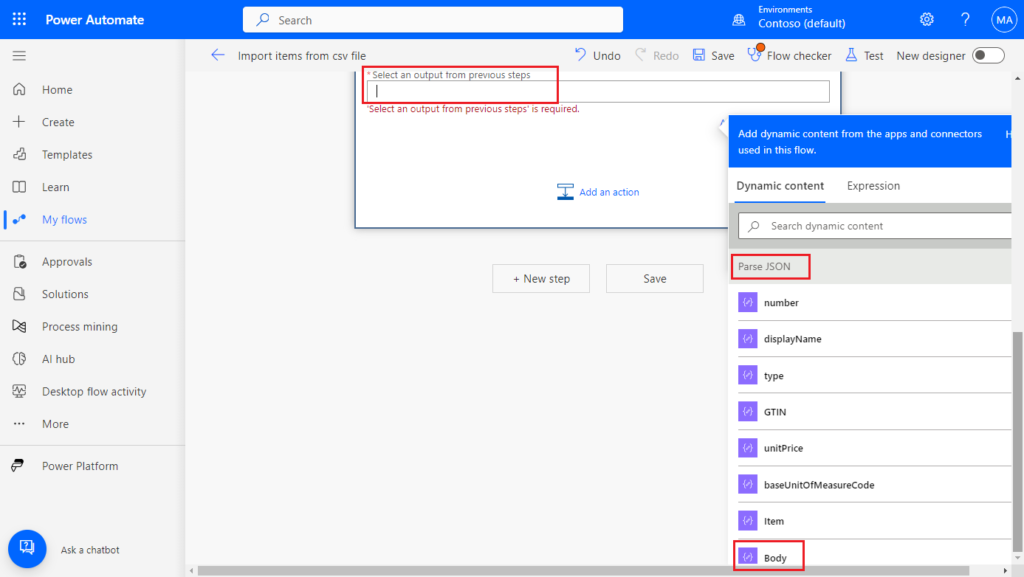

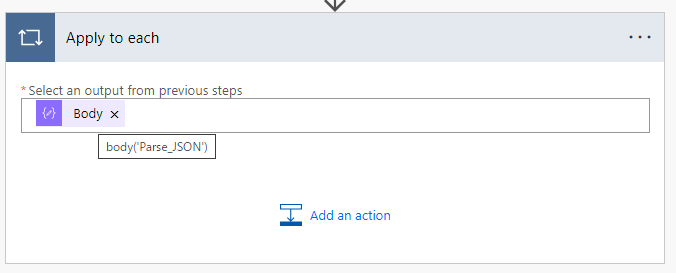

Click New step. Search for Apply to each, and click on it.

Select Parse JSON -> Body

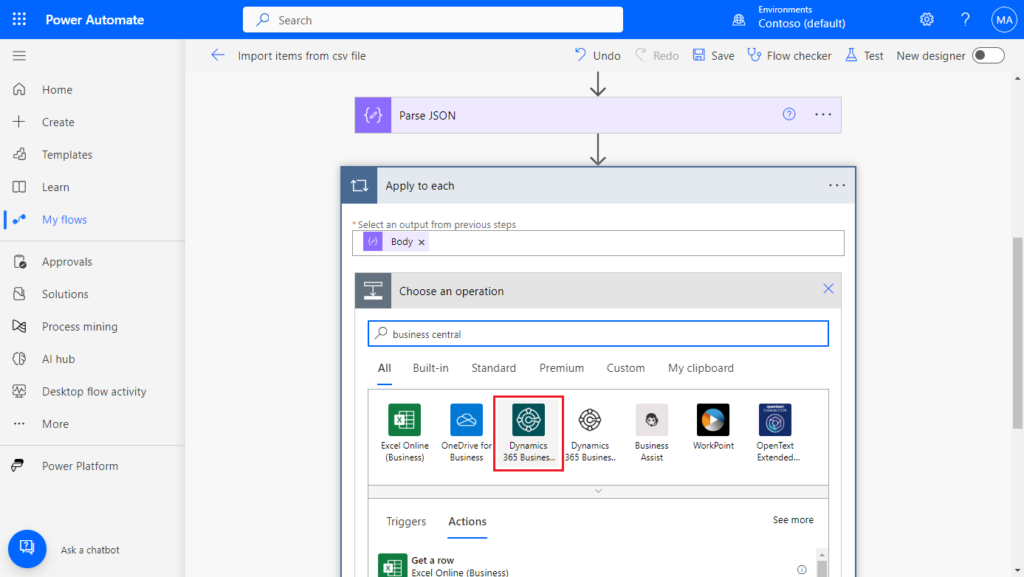

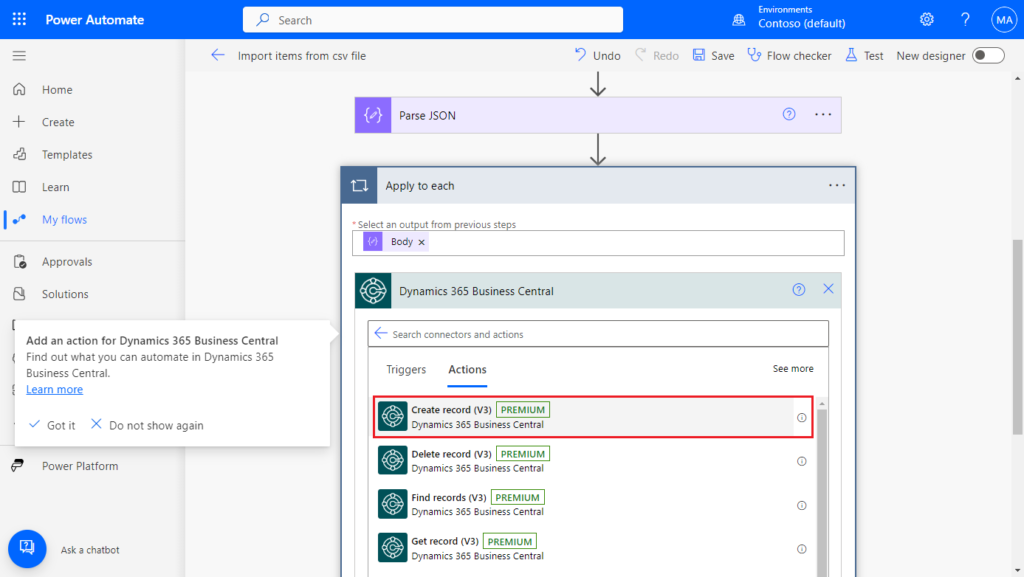

Click Add an action in Apply to each. Search for Business Central, and click on it.

We need to create items, so this time we choose Create record (V3)

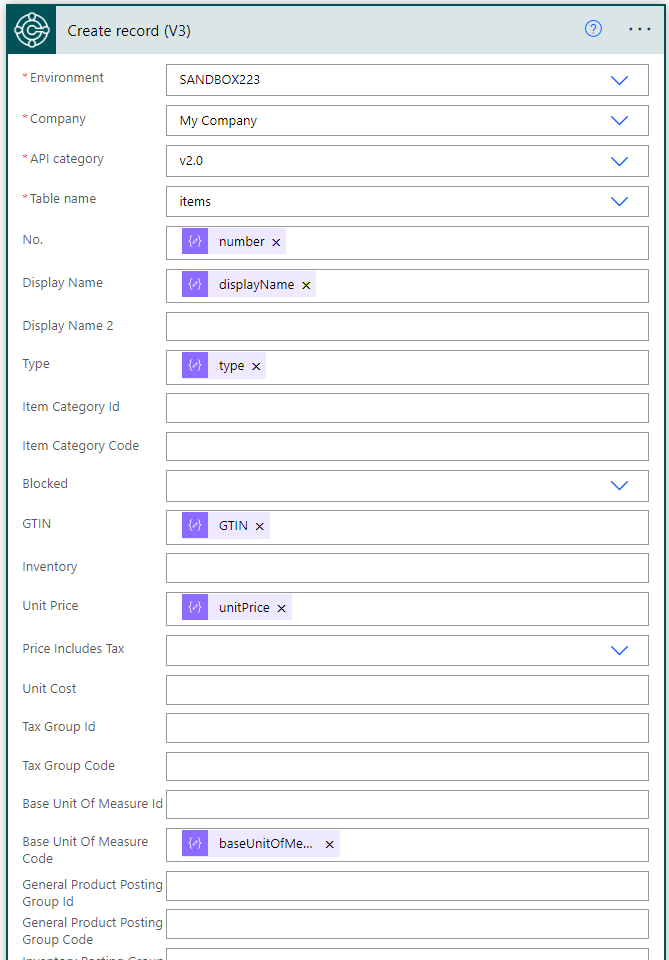

Select the BC environment that needs to be connected and the API that needs to be used. Custom APIs can also be used here.

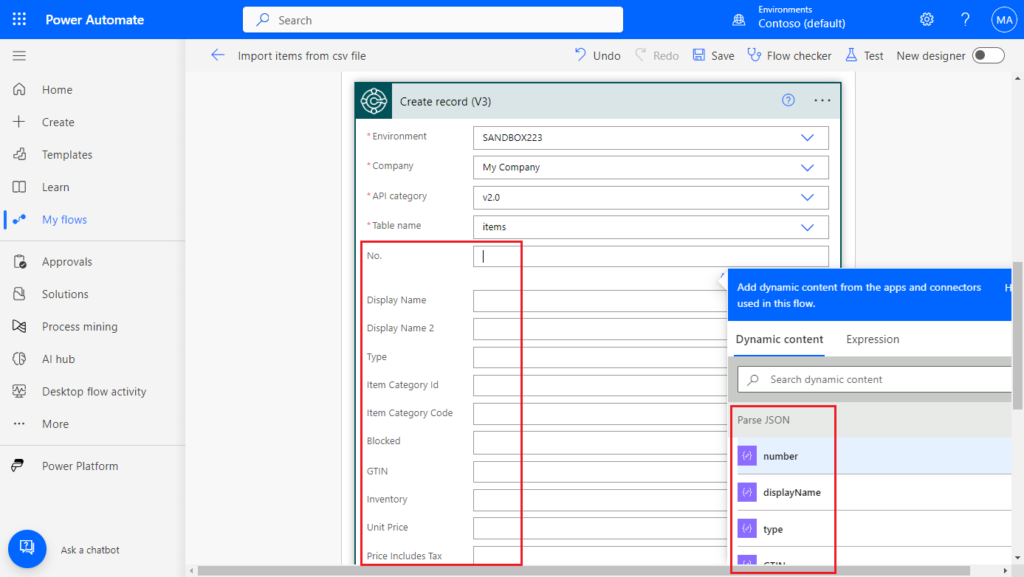

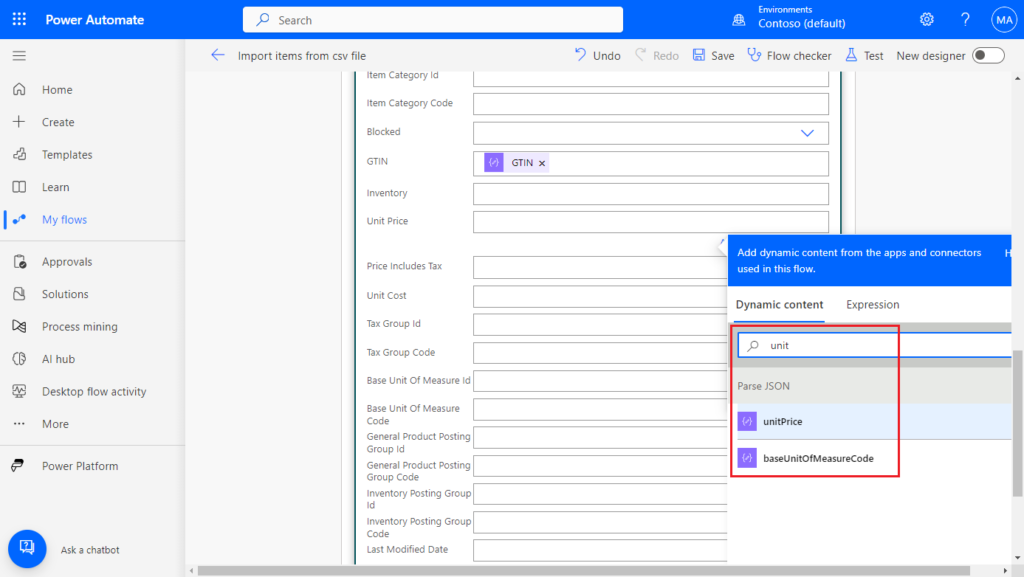

Then select the field of Parse JSON to the corresponding field of the API.

We can also search for it.

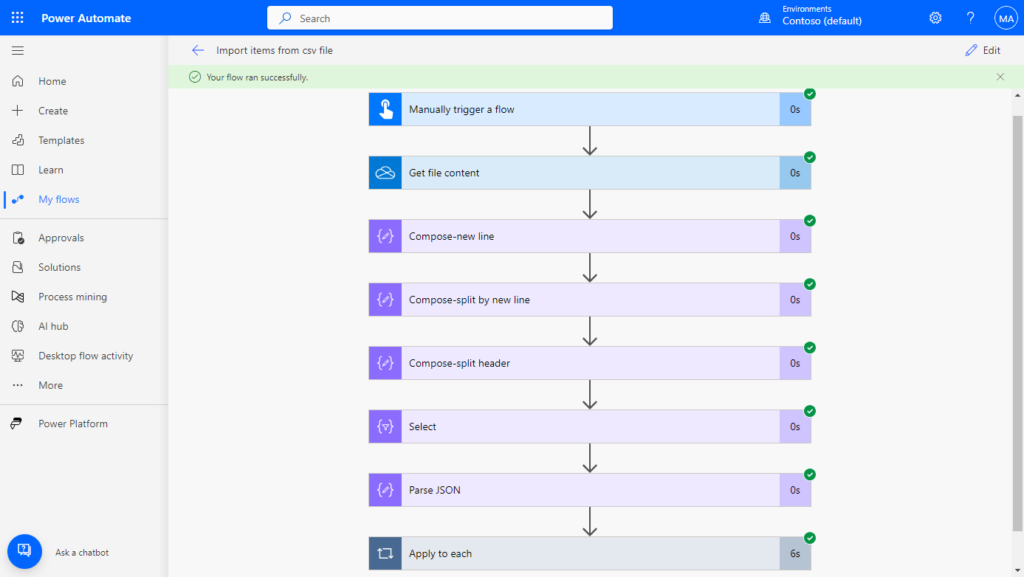

That’s all. Save the flow and test it again.

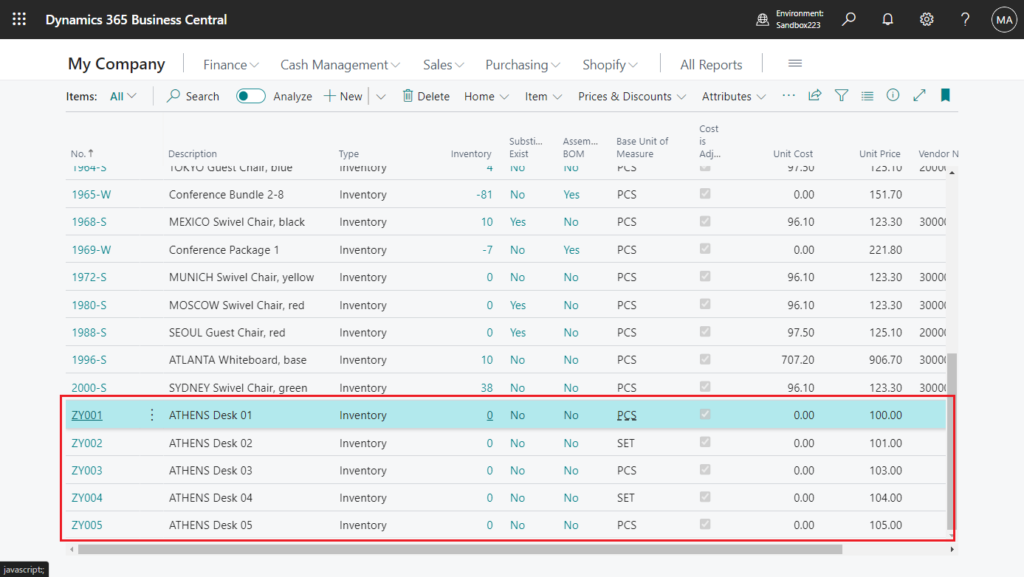

Great.

Test video:

Very simple, give it a try!!!😁

Download My Test flow:

PS:

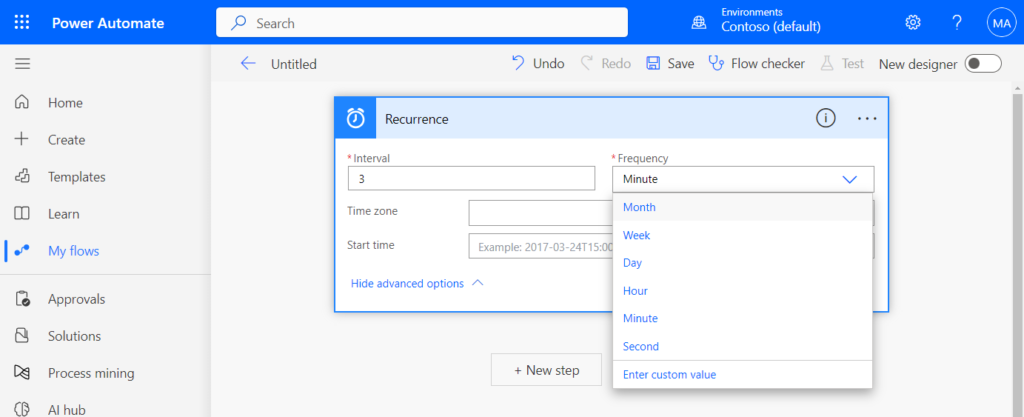

1. If you need to run the flow automatically, you can use the trigger below.

2. You can use actions provided by other partners, but some are not free.

3. Automatically create a new customer when receiving an email via Power Automate (No customization)

5. How to do “if Record.Find then” in Power Automate (Check if the record already exists or not)

6. The same approach also works for Azure Logic Apps.

END

Hope this will help.

Thanks for reading.

ZHU

コメント